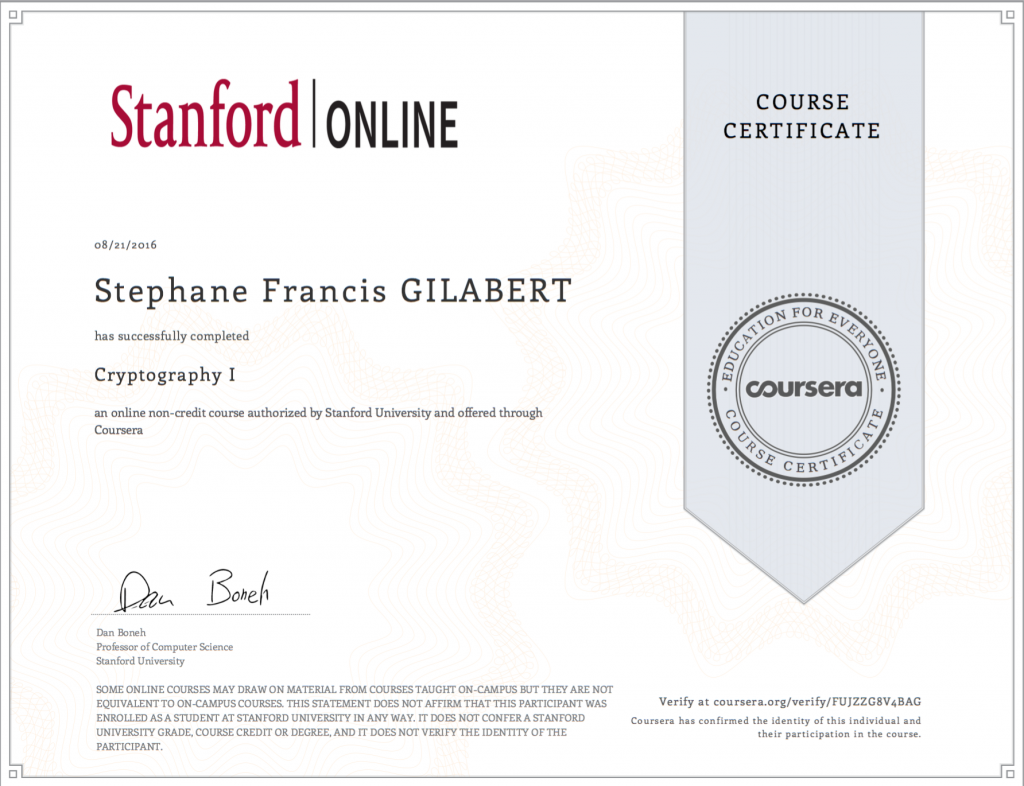

Today, I successfully completed a course on cryptography.

Cryptography is the cornerstone of information security and modern communications, and I have used it daily throughout my life and career, most often without even being aware of it! Its applications are expanding at an unprecedented pace with the advent of Internet technologies. While encrypting data is anything but new (ciphers date back to ancient times), the last few decades have transformed cryptography from an art into a genuine science. Formal definitions and assumptions are now rigorously established, and from them ciphers can be constructed whose security is proven mathematically, relative to those assumptions, with tools from algebra and number theory. Cryptography is a field of intense research, focused on hardening existing protocols and creating new ones for new applications.

The exponential growth of computing performance has made many protocols obsolete. The Data Encryption Standard (DES) was shown to be insecure by 1999, when its 56-bit key could be recovered by brute force in under a day, and it had to be replaced by the Advanced Encryption Standard (AES). Other encryption schemes were poorly designed, either because the science of cryptography was less advanced than it is today or because the designers simply made mistakes. This was probably the case with Wired Equivalent Privacy (WEP), whose multiple weaknesses now serve as a textbook case study for students of what not to do. Beyond design, implementation is equally important: a flawed implementation can turn a provably secure cipher into a completely insecure protocol. Many real-life examples exist, such as padding oracle attacks against flawed implementations of authenticated encryption, where error behaviour leaks whether a ciphertext's padding is valid.
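To get a feel for why a 56-bit key stopped being enough, here is a small back-of-the-envelope sketch. The attacker speed `RATE` is a hypothetical figure I picked purely for illustration, not a measurement of any real machine, and the function name is my own.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def brute_force_years(key_bits: int, keys_per_second: float) -> float:
    """Worst-case time, in years, to try every key of the given length."""
    return 2 ** key_bits / keys_per_second / SECONDS_PER_YEAR


# Hypothetical attacker speed (assumption, for illustration only).
RATE = 1e11  # keys tested per second

for bits in (56, 128):
    years = brute_force_years(bits, RATE)
    print(f"{bits}-bit key: {years:.3g} years ({years * 365.25:.3g} days)")
```

At this assumed rate, exhausting the 56-bit key space takes roughly eight days, while a 128-bit key space would take on the order of 10^20 years. Each extra key bit doubles the work, which is why the jump from DES to AES key sizes matters so much.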

In practice, the best advice for reliable encryption is to always use public, open-source, up-to-date crypto libraries from reliable and well-established providers. However, it is worth keeping in mind that the security of a cipher erodes over time as computing performance and attacker skill both increase, which is a real challenge for cryptographers. In fact, the right question when selecting an encryption scheme is not whether the cipher will be broken, but when, and whether that amount of time is acceptable for the application. Protecting a long-lived secret costs more than protecting a short-lived one, and is not always necessary. And the answer to that question is only ever an estimate. The rise of a disruptive technology like quantum computing may expose existing secret documents much sooner than expected…
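The "when, not whether" question can be turned into a rough estimate. The sketch below assumes, as a simplification of my own, that attacker speed doubles every `doubling_years` and that brute force is the only attack. It ignores cryptanalytic breakthroughs and quantum computers, so treat the result as an optimistic upper bound rather than a guarantee.

```python
import math


def years_until_breakable(key_bits: int,
                          keys_per_second_today: float,
                          acceptable_days: float,
                          doubling_years: float = 2.0) -> float:
    """Years until an exhaustive key search fits within `acceptable_days`,
    assuming attacker speed doubles every `doubling_years` (an assumption)."""
    needed_rate = 2 ** key_bits / (acceptable_days * 86400)
    if needed_rate <= keys_per_second_today:
        return 0.0  # already breakable within the time budget
    return doubling_years * math.log2(needed_rate / keys_per_second_today)


# Hypothetical inputs: 1e12 keys/s today, attacker willing to spend 30 days.
for bits in (56, 80, 128):
    print(bits, "bits:", round(years_until_breakable(bits, 1e12, 30), 1), "years")
```

Under this model every additional key bit buys `doubling_years` more years of protection, which gives a simple way to match key length to how long a secret actually needs to stay secret.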